When Game Engines Meet Hollywood

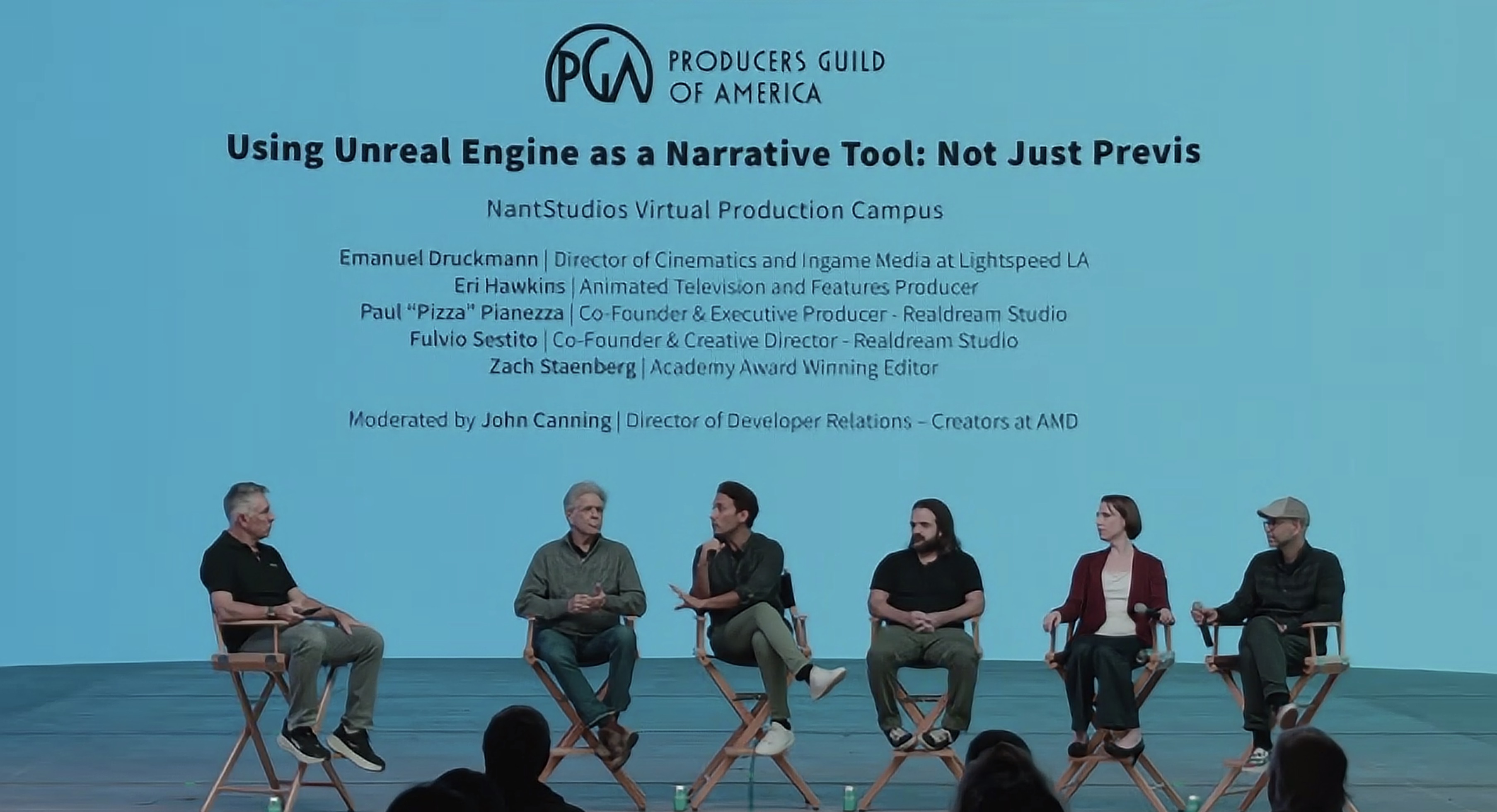

Tonight I watched a PGA/VES panel discussion at Nant Studios on using Unreal Engine as a narrative tool. What made it worth the drive was that they brought people who are deep in the trenches doing this work right now: Fulvio Sestito and Paul “Pizza” Pianezza from Realdream Studio, Eri Hawkins pioneering digital puppetry at Jim Henson Company, Emanuel Druckmann from Lightspeed LA working on AAA games, and Zach Staenberg, the Oscar-winning editor from The Matrix trilogy.

Post-Vis Saves The Killer During SAG Strike

Zach told this wild story about working on The Killer during the SAG strike. They were shooting in Paris and the actors had to leave, but the French crew was contracted for two more weeks. So they shot stunt performers doing wide shots and back-of-head footage. Then Fulvio showed up to the editing suite with a mocap suit, working solo. He built two blocks of Paris in Unreal based on the location. The director and editor could pick lenses, choose camera rigs, research what those rigs could do (height off the floor, movement range), and make adjustments in real time. They got same-day turnaround instead of waiting a week for traditional previs.

A Universal exec visited the set and saw the previs cut mixed with live-action footage. He asked “which is which” because he couldn’t tell them apart in the fast-paced editing. That’s when you know this isn’t just previs anymore but a different workflow that blurs the line between planning and final production.

Digital Puppetry Goes Real-Time

Eri’s work at Jim Henson Company is nuts. They’ve built this three-camera sitcom setup that’s fully digital - no live-action plate, no compositing. Motion capture performers do body work while Henson puppeteers perform the faces. Virtual cameras sit on pedestals and you’re shooting multicam. The performers see themselves as digital characters in real time while they’re performing. It looks and feels closer to an animated show but directs more or less the same as live-action.

The newest development is real-time dynamic interactive fur, on a project called “Monster Jam” where monsters are dancing. The fur reacts to the performers’ dance moves in real time, so the performers can see the fur’s reaction and incorporate it into their performance. Brian Henson said it reminded him of the excitement when his father was alive and pushing boundaries with puppetry.

The Double-Edged Sword of Endless Iteration

Eri dropped the truth bomb. The best thing and the most difficult thing about Unreal is exactly the same: you can iterate pretty much endlessly. Which raises the critical question: “when do you make it stop?” There are fewer external guardrails than in traditional pipelines. You’re not sending work overseas and waiting three days before you can review it. You have to be way more intentional about pipeline management and communication or you’ll just keep tweaking forever.

Sometimes they deliberately render in grayscale to isolate what they’re reviewing. It forces people to focus on story instead of getting distracted by “why is it green” or other aesthetic details that aren’t relevant to the current feedback cycle.

Small Teams Punch Above Their Weight

Pizza told this story about helping friends win a commercial pitch. They asked for a simple animatic but Realdream brought it into Unreal. Instead of delivering one animatic they generated multiple creative options with different shots and different approaches. Same timeframe as a single traditional animatic would have taken. That’s the real unlock here. Small teams can produce what used to require massive studios by removing the friction from iteration. Focus on the narrative idea you’re trying to sell instead of getting bogged down in technical constraints.

When Not To Use It

They were refreshingly honest about limitations and when this approach doesn’t make sense. Small dialogue films don’t need this level of technical infrastructure. Not every movie benefits from real-time workflows. Fulvio learned the hard way that you need earlier collaboration with production departments. His line producer and AD saw the finished previs right before the Paris shoot started and said “this shot’s way too expensive for our budget.” Shows how creating really polished previs can backfire if you’re not aligned on practical constraints from the beginning.

The technical limitations are real too. Compositing capabilities are limited, depth of field is a post-process effect rather than physically correct, and it’s difficult to get clean mattes. It handles certain file formats well but is terrible at others. Emanuel said it best when he described Unreal as its own ecosystem where “once you go Unreal, you can’t go outside of it” because it doesn’t talk well with other tools in traditional VFX pipelines.

The Quarry Blurs the Lines

For The Quarry, that choose-your-own-adventure horror game, they shot 33 hours of performance capture with 13 actors. That’s mini-series length content. The director had complete camera freedom, and they looked at dailies the same way a traditional film production does. The actors saw themselves as digital characters in actual game environments while performing. They weren’t thinking about this as a game production but rather as filmmakers who happen to be using a game engine.

Pizza’s vision is to work on a film and then have all those assets available in a game. Expand stories across media with interactive narratives using film assets. Universe expansion within the Unreal ecosystem. Fulvio’s interested in the deeper question of “what’s a game, what’s a film, what’s a cinematic” as projects continue to blur those boundaries in ways that challenge our traditional categories.

The Academy Doesn’t Know What To Call It Either

Someone making a short film in Unreal asked whether to submit it to the Academy as animation or as a short film. The panel’s answer was “it would be animation.” This shows how even the industry’s gatekeepers and award bodies are struggling with these blurred boundaries. The tools don’t care about our traditional silos and classification systems.

Where This Goes

Fulvio summed it up perfectly: “For a lot of us, we’ve been waiting almost a couple of decades for these two industries to kind of meet in the middle. I think we’re seeing now movie studios are adopting real-time technology, game people are thinking more cinematically, and I would love to see even more crossover.”

This convergence isn’t about cool technology for its own sake but about storytelling tools. You see results instantly in the viewport with no render-wait-review cycle. Creative decisions happen at the speed of thought.

The question isn’t whether to learn this workflow. It’s whether you’ll have time to catch up before it becomes table stakes for anyone working in narrative content creation.