Kirshbot: Building an AI That Actually Sounds Like a Human (who happens to be a robot)

Most AI projects are about scale - faster workflows, cheaper automation, fewer clicks.

This one is different.

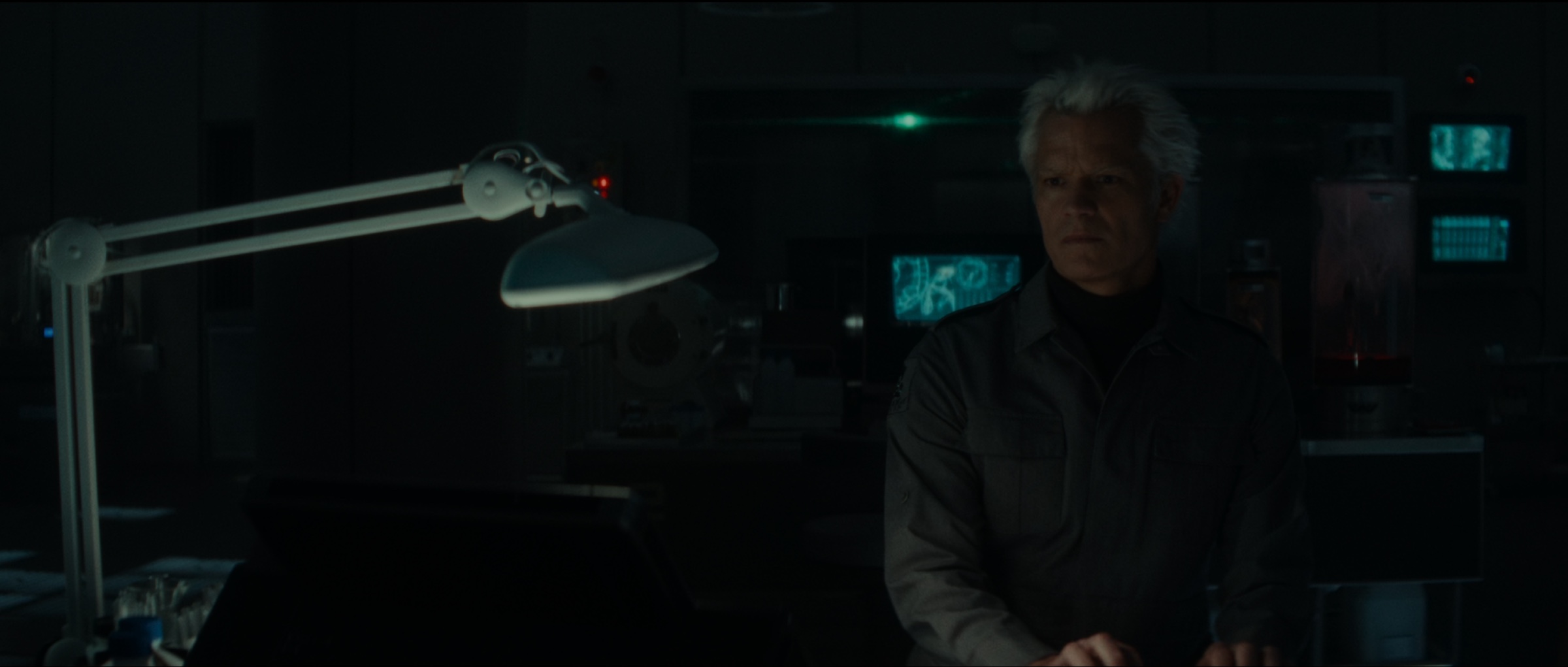

The goal with Kirshbot wasn’t efficiency. It was authenticity: taking a fictional character from the new series Alien: Earth and giving him a digital voice that feels real outside the show.

Not parody. Not “AI-inspired.” The actual rhythms. The pace. The way he pauses mid-sentence because he’s considering something. The dry, stripped-down delivery that makes Kirsh stand out in Alien: Earth.

The project became a crash course in what it takes to recreate a voice in AI without it sounding like cosplay. Spoiler: it’s not about more data, it’s about enforcing constraints.

Why Kirsh?

Kirsh isn’t the loudest character in the series, but that’s exactly why he works. His voice carries weight because he doesn’t waste words.

If you’re going to test whether AI can hold character over time, you don’t pick a fast-talking lead. You pick someone like Kirsh - someone whose personality lives in the margins: pauses, half-sentences, carefully chosen phrasing.

And if you get it wrong, it’s obvious immediately. That’s what made him the perfect stress test.

The Core Concept

At the highest level, Kirshbot works like this:

Audio Input → Whisper Transcription → Speech Analysis → Character Modeling → Content Generation

Take original episode dialogue. Break it down with Whisper, librosa, and webrtcvad. Measure every detail: words per minute, pause patterns, sentence complexity. Use that data to build a profile. Then generate new posts, but only if they fit the profile.
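To make the measurement step concrete, here is a minimal sketch of how pace and pauses could be derived from a transcript. It assumes Whisper-style segments (dicts with `start`, `end`, and `text`), and it reads pauses off the gaps between segments rather than from librosa or webrtcvad, so it is a simplification and not the project's actual analysis script. The function name and the `min_pause` threshold are made up for illustration.

```python
from statistics import mean


def pace_and_pauses(segments, min_pause=0.15):
    """Compute speaking pace and pause stats from Whisper-style segments.

    Each segment is a dict with "start" and "end" (seconds) and "text".
    min_pause is a hypothetical cutoff, not a value from the project.
    """
    words = sum(len(seg["text"].split()) for seg in segments)
    speech_seconds = sum(seg["end"] - seg["start"] for seg in segments)

    # A pause is the silence between the end of one segment and the start of the next.
    gaps = [nxt["start"] - cur["end"] for cur, nxt in zip(segments, segments[1:])]
    pauses = [g for g in gaps if g >= min_pause]

    return {
        "wpm": words * 60 / speech_seconds if speech_seconds else 0.0,
        "pause_count": len(pauses),
        "mean_pause_s": mean(pauses) if pauses else 0.0,
    }


if __name__ == "__main__":
    demo = [
        {"start": 0.0, "end": 3.0, "text": "She is not human anymore now."},
        {"start": 3.5, "end": 6.5, "text": "Why are we pretending she is?"},
    ]
    print(pace_and_pauses(demo))
```

A real pipeline would likely take pause boundaries from a voice activity detector instead of segment gaps, since segment timestamps are coarse.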

It’s not “AI pretending to be Kirsh” - it’s AI being forced into Kirsh’s constraints.

What the Analysis Actually Found

The data came back with metrics that explain why Kirsh feels distinct:

- 107.6 WPM average pace (deliberate, slow)

- 0.23 s pauses, 76 per episode (he thinks in silence)

- Grade 4.24 reading level (simple words, heavy meaning)

- 7.2 words per sentence (short, clipped)

- Almost no filler (0.5 per minute)

These became the hard guardrails. If generated content drifted outside them, it was flagged.
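As a rough illustration of what "flagged" could mean in practice, the sketch below checks a draft against two of the measured numbers: sentence length and filler. The 7.2 target comes from the list above. The tolerance band, the filler word list, and the function names are assumptions made for this example.

```python
import re

TARGET_WORDS_PER_SENTENCE = 7.2  # measured value from the profile above
TOLERANCE = 0.5                  # hypothetical: allow +/-50% around the target
FILLERS = {"um", "uh", "er", "hmm"}  # hypothetical filler list


def text_metrics(text):
    """Return words per sentence and filler count for a draft post."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z'’]+", text.lower())
    return {
        "words_per_sentence": len(words) / len(sentences) if sentences else 0.0,
        "filler_count": sum(w in FILLERS for w in words),
    }


def drift_flags(text):
    """List which guardrails a draft breaks; an empty list means it fits."""
    metrics = text_metrics(text)
    low = TARGET_WORDS_PER_SENTENCE * (1 - TOLERANCE)
    high = TARGET_WORDS_PER_SENTENCE * (1 + TOLERANCE)
    flags = []
    if not low <= metrics["words_per_sentence"] <= high:
        flags.append("sentence_length")
    if metrics["filler_count"] > 0:
        flags.append("filler")
    return flags
```

With these assumed settings, one of the real Kirsh lines quoted later in this post passes clean, while a draft like "Um, well." is flagged on both counts.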

Building Personality Into the System

Numbers alone don’t give you a voice - you also need context.

Kirsh’s themes map directly from the show: survival logic, philosophical one-liners, observations that land heavy because they’re so flat. That split into two posting modes:

- 6:00 AM PT – survival or practical wisdom

- 4:00 PM PT – philosophical observations

By tying each tone to a fixed time slot, the bot avoids randomness.
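The slot-to-mode mapping is small enough to write down directly. This is only a sketch: the times and themes come from the list above, while the dictionary layout, the mode labels, and the function name are illustrative assumptions.

```python
from datetime import time

# Slot times (Pacific Time) and themes are from the post; the labels are made up.
POSTING_MODES = {
    time(6, 0): "survival",
    time(16, 0): "philosophical",
}


def mode_for_slot(slot):
    """Return the posting mode for a scheduled slot, or None if it is not a slot."""
    return POSTING_MODES.get(slot)
```

Anything that is not one of the two scheduled slots returns `None`, so an off-schedule trigger has no mode to generate for.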

Guardrails and Validation

Authenticity isn’t about being creative, it’s about saying no.

Every post clears four checks before it goes live:

- Speech Pattern Score ≥70%

- Authenticity Score ≥70%

- Safety Score ≥90%

- Character Count ≤280

Fail any one and the post is discarded. Fewer but better is the rule.
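The four checks reduce to a simple all-or-nothing gate. The sketch below uses the thresholds listed above and assumes the three scores arrive as percentages from 0 to 100. How the scores are computed is not shown here, and the `Candidate` class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    text: str
    speech_pattern: float  # percent, 0-100
    authenticity: float    # percent, 0-100
    safety: float          # percent, 0-100


def failed_checks(candidate):
    """Return the names of the checks a candidate post fails."""
    checks = {
        "speech_pattern": candidate.speech_pattern >= 70,
        "authenticity": candidate.authenticity >= 70,
        "safety": candidate.safety >= 90,
        "length": len(candidate.text) <= 280,
    }
    return [name for name, passed in checks.items() if not passed]


def should_post(candidate):
    """A post goes live only if every check passes."""
    return not failed_checks(candidate)
```

Returning the list of failed checks, instead of a bare boolean, makes it easy to log why a post was discarded.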

Why n8n Runs the Show

The workflow is fully automated in n8n:

- Posts twice daily

- Rotates episodes if no new content is found after 8+ days

- Logs all attempts (including failures) to Google Sheets

- Provides fallback defaults if any step fails
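The rotation rule lives in n8n nodes, but the logic can be sketched in a few lines of Python. This version assumes "rotates" means moving to the next episode in a fixed list and wrapping around at the end; that reading, along with the function and parameter names, is an assumption.

```python
from datetime import date

ROTATE_AFTER_DAYS = 8  # "8+ days" from the list above


def pick_episode(episodes, current, last_new_content, today):
    """Stay on the current episode unless nothing new has arrived for 8+ days."""
    if (today - last_new_content).days < ROTATE_AFTER_DAYS:
        return current
    # Hypothetical rotation order: next episode in the list, wrapping around.
    index = episodes.index(current)
    return episodes[(index + 1) % len(episodes)]
```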

Think of it like orchestration for a personality, not just text generation.

How It’s Structured

The repo is organized per episode:

kirshbot/

├── S01E01/

│ ├── analyze_whisper_output.py

│ ├── analysis_features.json

│ ├── analysis_segments.json

│ ├── analysis_context.json

│ ├── analysis_flags.txt

│ └── S01E01_16k.json

├── manifest.json

└── README.md

This keeps models modular and swappable instead of one brittle system.
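One practical upside of the per-episode layout is that each episode's analysis can be loaded on its own. Here is a minimal sketch that collects every `analysis_features.json` under the repo root. It assumes episode folders follow the `S01E01` naming shown above and makes no assumption about what is inside each JSON file. The function name is hypothetical.

```python
import json
from pathlib import Path


def load_episode_features(root):
    """Map each episode folder name to the parsed analysis_features.json inside it."""
    features = {}
    for path in sorted(Path(root).glob("S*E*/analysis_features.json")):
        with path.open(encoding="utf-8") as handle:
            features[path.parent.name] = json.load(handle)
    return features
```

Swapping an episode in or out is then a matter of adding or removing a folder, with no change to the loading code.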

What He Actually Says

Real samples from Kirsh’s dialogue:

- “What if, while I’m squashing it, another scorpion stings me to protect its friend?”

- “Think of how the scorpion must feel, trapped under glass, menaced by giants.”

- “She’s not human anymore. Why are we pretending she is?”

Generated content mirrors this: clipped, reflective, understated. No fluff, no emojis.

Why This Is More Than a Fandom Project

On the surface, it’s about an android from Alien: Earth. Underneath, it’s a case study in keeping AI honest.

The rules generalize:

- If you don’t measure, you drift

- If you don’t validate, you lose authenticity

- If you don’t automate, you burn out

Kirsh is a demo, but the framework works for brand voices, historical figures, training simulations - anywhere tone and consistency matter.

What’s Next

The system already shows the approach works for text. Next steps:

- Layer audio: TTS tuned to Kirsh’s measured patterns

- Add multi-language support

- Tie context to real-world events

- Expose profiles via API

But the principle stays the same: measurement and constraint. Letting AI improvise collapses the illusion.

Takeaway

Most AI projects chase speed. Kirshbot chases precision.

It works because it measures, validates, and refuses to post unless the output matches the voice.

That’s the future of believable AI: not just faster, but consistent, with identity baked in.